A lot of work has gone into clarifying and enhancing the environment variables used by the Apache Hadoop shell script code. One of those areas is the handling of the various _USER environment variables.

Prior to 3.0.0-alpha4

In previous releases, the supported variables were:

| Name | Description |
|---|---|
| HADOOP_SECURE_DN_USER | User to run a secure, privileged-start DataNode |
| HADOOP_PRIVILEGED_NFS_USER | User for secure, privileged-start NFS services |

This inconsistency leads to a lot of confusion. Why is it using ‘DN’ instead of ‘DATANODE’ like HADOOP_DATANODE_OPTS? If all of the YARN environment variables start with YARN, why don’t these start with HDFS? Why is one ‘SECURE’ and the other ‘PRIVILEGED’?

The key reasons are (a) history and (b) the lack of consistent guidelines for how things should be named. Because 3.0.0 lets the community break backward compatibility, and because there are now new guidelines for writing shell script code, there was an opportunity to clean this up and add new functionality at the same time.

Secure User Consistency

The old names are still supported (with a deprecation warning), but the new names are consistent:

| Old | New |
|---|---|
| HADOOP_SECURE_DN_USER | HDFS_DATANODE_SECURE_USER |
| HADOOP_PRIVILEGED_NFS_USER | HDFS_NFS_SECURE_USER |
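To show how an old name can keep working during the transition, here is a minimal POSIX shell sketch. The helper `deprecate_envvar` is hypothetical and is not taken from the Hadoop scripts. The sketch assumes one particular behavior: when the old variable is set, its value is copied into the new one and a warning is printed to stderr.

```shell
# Hypothetical sketch, not Hadoop's implementation: if a deprecated variable
# is set, warn on stderr and copy its value into the new variable name.
deprecate_envvar() {
  local old new oldval name
  old=$1
  new=$2
  # Both arguments are passed to eval, so accept only plain variable names.
  for name in "$old" "$new"; do
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*) return 2 ;;
    esac
  done
  eval "oldval=\${$old:-}"
  if [ -n "$oldval" ]; then
    echo "WARNING: $old is deprecated; using its value for $new." >&2
    eval "$new=\$oldval"
  fi
}

# Apply the two renames from the table above.
deprecate_envvar HADOOP_SECURE_DN_USER HDFS_DATANODE_SECURE_USER
deprecate_envvar HADOOP_PRIVILEGED_NFS_USER HDFS_NFS_SECURE_USER
```

If the old variable is unset, the new variable is left untouched. This means configurations that already use the new names are unaffected.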

In fact, the code has been generalized such that any new privileged user that is required will follow the same (command)_(subcommand)_SECURE_USER pattern.
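The naming rule itself is mechanical: uppercase the command and the subcommand, then append the suffix. A minimal POSIX shell sketch follows. The name `build_subcmd_var` is hypothetical, not a function from the Hadoop scripts.

```shell
# Hypothetical helper: build "(COMMAND)_(SUBCOMMAND)_(SUFFIX)" by uppercasing
# the command and subcommand names, e.g. hdfs + datanode + SECURE_USER.
build_subcmd_var() {
  local cmd sub
  cmd=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
  sub=$(printf '%s' "$2" | tr '[:lower:]' '[:upper:]')
  printf '%s_%s_%s\n' "$cmd" "$sub" "$3"
}

build_subcmd_var hdfs datanode SECURE_USER   # prints HDFS_DATANODE_SECURE_USER
```

Passing the suffix as a parameter lets the same rule produce both the `_SECURE_USER` names and the plain `_USER` names.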

New Functionality: User Restrictions

What about non-secure situations? Using the same pattern, Hadoop can now put artificial restrictions on which users may execute which commands. All that needs to be configured is an environment variable following the (command)_(subcommand)_USER pattern. This is particularly useful for daemons. Here’s a small sampling:

| Name | Description |
|---|---|
| HADOOP_KMS_USER | Key Management Server (KMS) user |
| HDFS_DATANODE_USER | Insecure DataNode user; user that launches a secure DataNode (i.e., root) |
| HDFS_HTTPFS_USER | HttpFS proxy user |
| HDFS_NAMENODE_USER | NameNode user |
| HDFS_SECONDARYNAMENODE_USER | SecondaryNameNode user |
| MAPRED_HISTORYSERVER_USER | MapReduce JobHistory Server user |
| YARN_NODEMANAGER_USER | NodeManager user |
| YARN_RESOURCEMANAGER_USER | ResourceManager user |

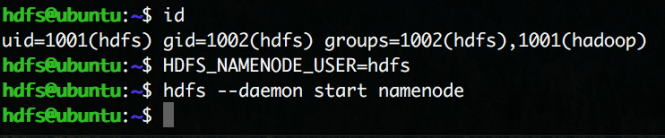

For example, suppose I limit the NameNode to run only as the hdfs user. When I try to launch the NameNode as myself, it fails to start. However, if I perform the same action as the hdfs user, it succeeds.
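The check behind this example can be sketched in a few lines of POSIX shell. This is a simplified illustration, not the code in Hadoop's scripts. The function name `check_subcmd_user` is hypothetical, and the sketch only compares against the USER environment variable.

```shell
# Hypothetical sketch of a (command)_(subcommand)_USER restriction check.
# A restriction is configured with, e.g., "export HDFS_NAMENODE_USER=hdfs".
# It only trusts the USER environment variable, so it is easy to bypass.
check_subcmd_user() {
  local var allowed
  var=$(printf '%s_%s_USER' "$1" "$2" | tr '[:lower:]' '[:upper:]')
  # The name is passed to eval, so reject anything that is not a plain name.
  case "$var" in
    [0-9]*|*[!A-Z0-9_]*) echo "ERROR: invalid command name" >&2; return 2 ;;
  esac
  eval "allowed=\${$var:-}"
  # No _USER variable set: the subcommand is unrestricted.
  if [ -z "$allowed" ]; then
    return 0
  fi
  if [ "$allowed" != "${USER:-}" ]; then
    echo "ERROR: $1 $2 can only be executed by $allowed." >&2
    return 1
  fi
  return 0
}
```

With `HDFS_NAMENODE_USER=hdfs` set, `check_subcmd_user hdfs namenode` returns 1 for any other USER and 0 for hdfs. A subcommand with no matching _USER variable is never restricted.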

The user restriction code works for every Apache Hadoop subcommand.

Because this feature only checks environment variables, it is not a security mechanism and can easily be circumvented. However, it does help prevent accidentally running a daemon as the wrong user.

New Feature: User Switching

The same per-user environment variables also prevent one of the more common mistakes when launching daemons. Admins would log in as root, start the NameNode, and go on their way. Later, starting the NameNode as the correct user would fail with permission errors. While not a fatal mistake, it was annoying. By building on the user restriction code, the scripts now properly switch to the correct user.

In the example run, there were initially no processes running as the hdfs user. We then launched the NameNode, checked the processes running as hdfs again, and saw the NameNode process. This works for all types of daemons.
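The switching decision can be pictured as a small function. This is a hedged POSIX shell sketch, not Hadoop's implementation. The name `plan_daemon_launch` is hypothetical, the invoking user is passed in as an argument, and root is recognized by name only.

```shell
# Hypothetical sketch: decide how to launch a daemon, given the invoking user.
# Prints "run", "switch <user>", or "error".
plan_daemon_launch() {
  local var target invoker
  invoker=$3
  var=$(printf '%s_%s_USER' "$1" "$2" | tr '[:lower:]' '[:upper:]')
  # The name is passed to eval, so reject anything that is not a plain name.
  case "$var" in
    [0-9]*|*[!A-Z0-9_]*) echo error; return 2 ;;
  esac
  eval "target=\${$var:-}"
  if [ -z "$target" ] || [ "$target" = "$invoker" ]; then
    # Unrestricted, or already the right user: launch as-is.
    echo run
  elif [ "$invoker" = root ]; then
    # A real launcher would re-execute itself as $target here (for example
    # with su or sudo); this sketch only reports the decision.
    echo "switch $target"
  else
    # A non-root user cannot switch, so refuse to launch.
    echo error
    return 1
  fi
}

# Usage: plan_daemon_launch hdfs namenode "$(id -un)"
```

Passing the invoking user as an argument keeps the decision logic separate from the actual re-execution. Only the re-execution step would need root privileges.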

Returning Feature: start-all and Friends

Apache Hadoop 0.20.203 added security support. One of the changes needed to make it secure was to launch the DataNode as root. In secure mode, a single user should not be used to start all of the daemons. As a result, the start-all command was disabled to prevent admins from doing just that. Thanks to the functionality above, however, start-all is back!

In the example run, start-all.sh fired up the NameNode(s) (with either a SecondaryNameNode or an HA NameNode configuration), DataNodes, ResourceManager(s), and NodeManagers, both locally and remotely. It does require a working ssh configuration as well as the correct _USER variables. This particular example runs on a single node, and the SecondaryNameNode's variable isn't set, which caused the abort error in the output.
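As an illustration, the hadoop-env.sh settings below use variable names from the tables earlier in this post. The user names are placeholders. The `missing_user_vars` helper is a hypothetical pre-flight check, not something start-all.sh provides. Only the _USER variables are shown; a secure DataNode needs further configuration.

```shell
# Example hadoop-env.sh settings for the daemons start-all.sh launches in a
# non-HA cluster with a secure DataNode. User names are placeholders, and only
# the _USER variables are shown.
export HDFS_NAMENODE_USER=hdfs
export HDFS_SECONDARYNAMENODE_USER=hdfs
export HDFS_DATANODE_USER=root
export HDFS_DATANODE_SECURE_USER=hdfs
export YARN_RESOURCEMANAGER_USER=yarn
export YARN_NODEMANAGER_USER=yarn

# Hypothetical pre-flight check (not part of start-all.sh): print each named
# variable that is unset or empty, and return 1 if any were found.
missing_user_vars() {
  local var val status
  status=0
  for var in "$@"; do
    # Names are passed to eval, so accept only plain variable names.
    case "$var" in
      ''|[0-9]*|*[!A-Za-z0-9_]*) return 2 ;;
    esac
    eval "val=\${$var:-}"
    if [ -z "$val" ]; then
      echo "$var"
      status=1
    fi
  done
  return "$status"
}
```

Running a check like this before start-all.sh would flag an unset variable, such as the missing SecondaryNameNode setting in this example, before any daemon is launched.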

Checking what is running as hdfs and what is running as yarn shows the NN, DN, RM, and NM, as expected. Bonus: the DataNode process was launched via jsvc, indicating a secure DataNode!

Bigger Impacts

One of the big benefits of building the user switching code into the base distribution of Apache Hadoop is that it greatly simplifies any additional code that do-it-yourselfers or vendors would otherwise have to write. Because it is part of the shared community source, it will get more testing, scrutiny, and verification than any individual organization could provide.

The other big win is the impact on technologies such as Docker. It should now be significantly easier to build an Apache Hadoop cluster in a single container for development and testing purposes: just set the environment variables and go.

Here’s hoping these new features make your environment easier to manage and more productive!